By Elke Porter | WBN Ai | June 25, 2025


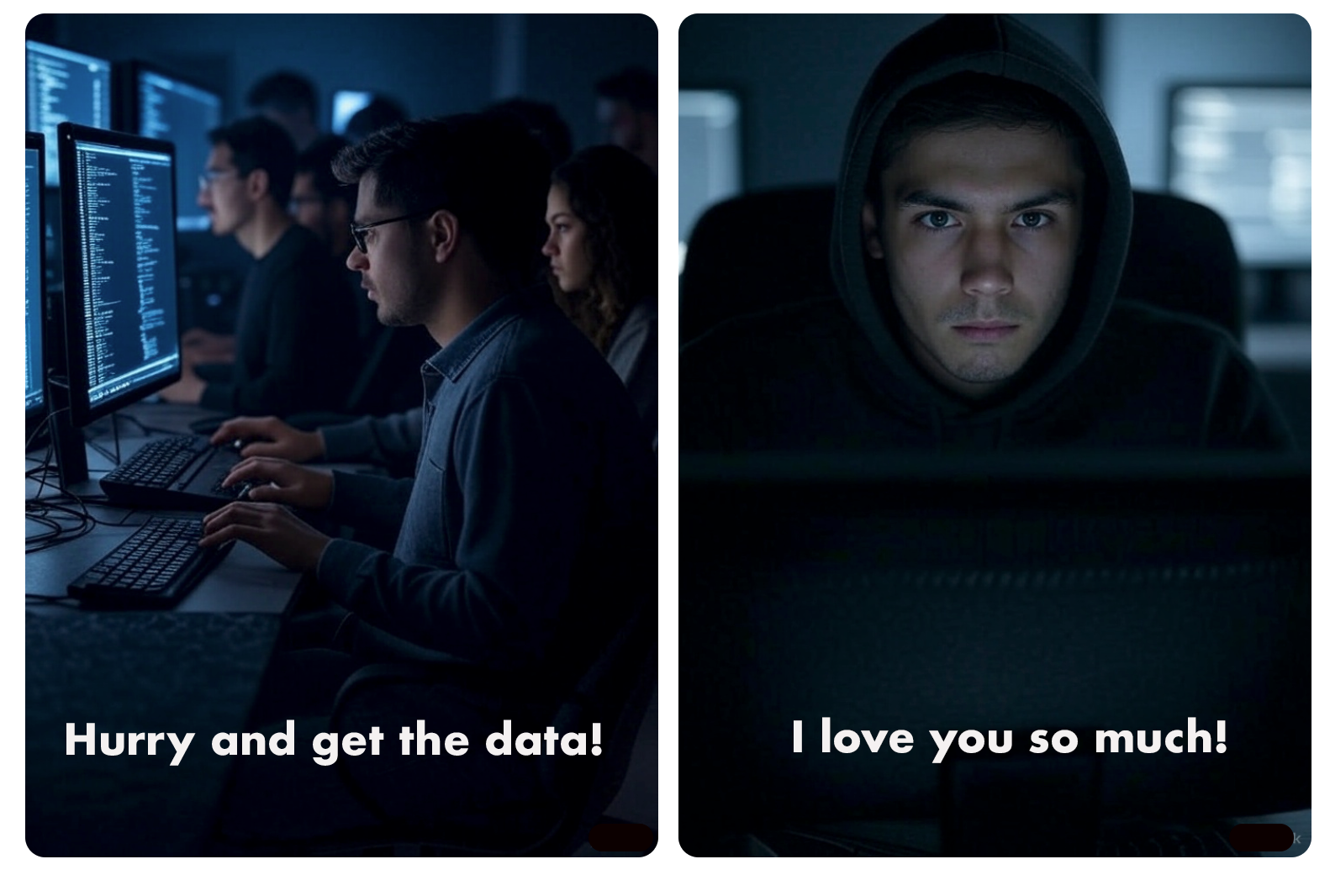

As the gold rush of AI tools accelerates, a shadowy force is rising in parallel: AI hackers. These aren’t your stereotypical hoodie-wearing loners. Today’s digital bandits are highly organized, well funded, and often AI-enhanced themselves. Their prize? Data. And not just any data, but your data: personal, emotional, intimate information that is fast becoming the new gold.

Every conversation you have with an AI tool leaves a trace. Millions of teenagers are turning to chatbots for emotional support, discussing loneliness, bullying, and even relationships with AI “boyfriends.” What they share in these chats is raw, deeply personal, and often unfiltered. For hackers, this is a treasure trove: data that can be sold, manipulated, or used to build more convincing scams and synthetic identities.

Traditional websites mostly hold account details and transaction records behind user logins. AI platforms, by contrast, accumulate high-volume, highly sensitive conversation logs. Many users don’t realize that, unless a provider explicitly states otherwise, their conversations may be stored in logs, used to further train models, or shared with third-party services. The more we open up to these tools, the more of our “digital essence” we release: small fragments of information that, together, paint a detailed picture of our personality, preferences, and vulnerabilities.

Storing this data safely takes more than encryption. It requires substantial infrastructure: multi-layered firewalls, secure cloud configurations, strict access controls, and constant monitoring. Many startups in the AI space lack the resources or expertise to manage security at this level, which makes them attractive targets for attackers.

So how are we protecting ourselves? Big players such as OpenAI, Google, and Anthropic invest heavily in security audits and in compliance frameworks such as SOC 2 and ISO 27001. Smaller, fast-moving companies in the AI gold rush, however, may skip those steps in the race to market.

The real danger? We’re handing over our most personal truths to systems we barely understand—systems that, if breached, could expose not just our data, but our selves.

In the age of AI intimacy, security isn't optional—it's existential. Think twice before telling your AI everything. Because someone, somewhere, may already be listening.

TAGS: #AI Privacy #Data Security #Digital Gold #AI Hackers #AI Ethics #Cyber Risk #WBN AI Edition #Elke Porter #WBN News Vancouver

Connect with Elke at Westcoast German Media, on LinkedIn (Elke Porter), or on WhatsApp at +1 604 828 8788. Public Relations. Communications. Education.