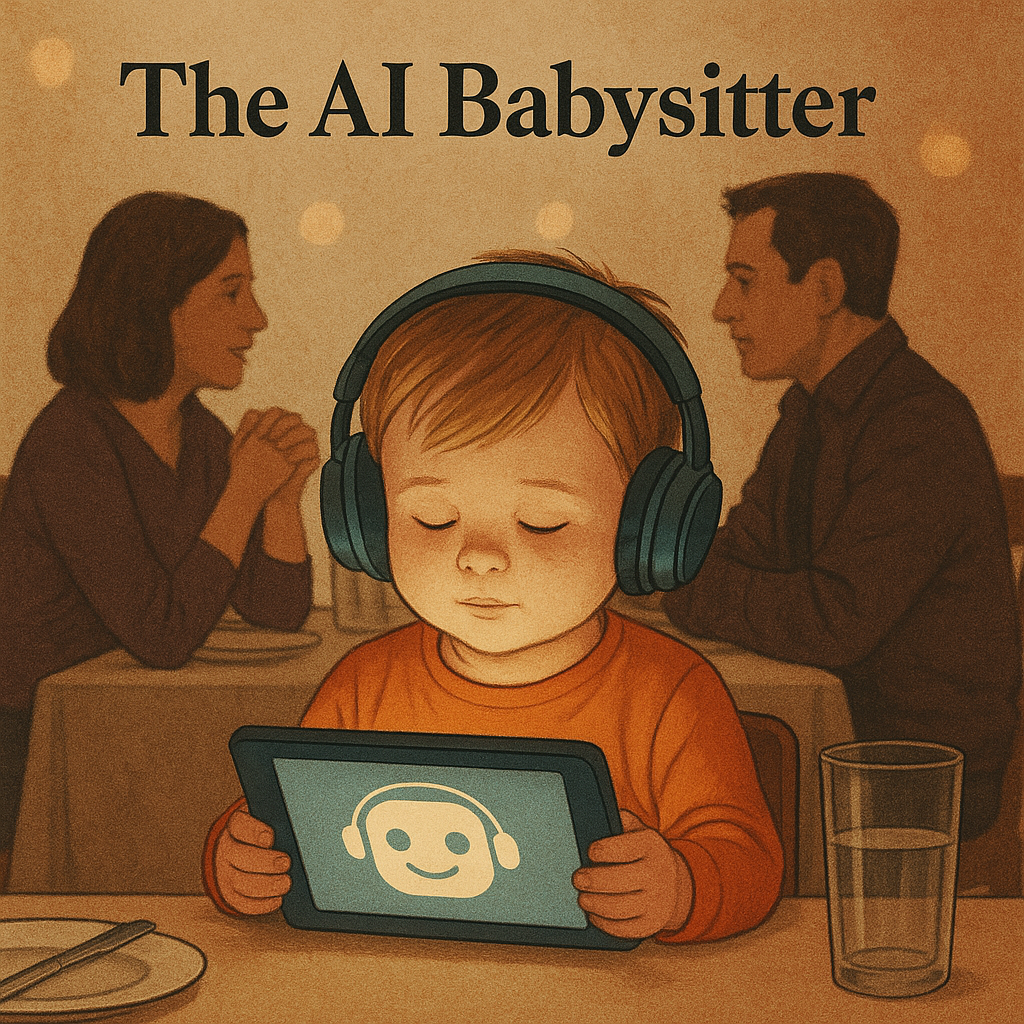

When AI Is a Babysitter

There’s a modern ritual that almost every parent performs, often without a second thought. You’re at a restaurant, trying to talk, the child grows restless, and the tablet slides across the table like a pacifier made of pixels.