Why American AI Ambition Can Turn into European Legal Exposure

There’s a thin line between genius and violation.

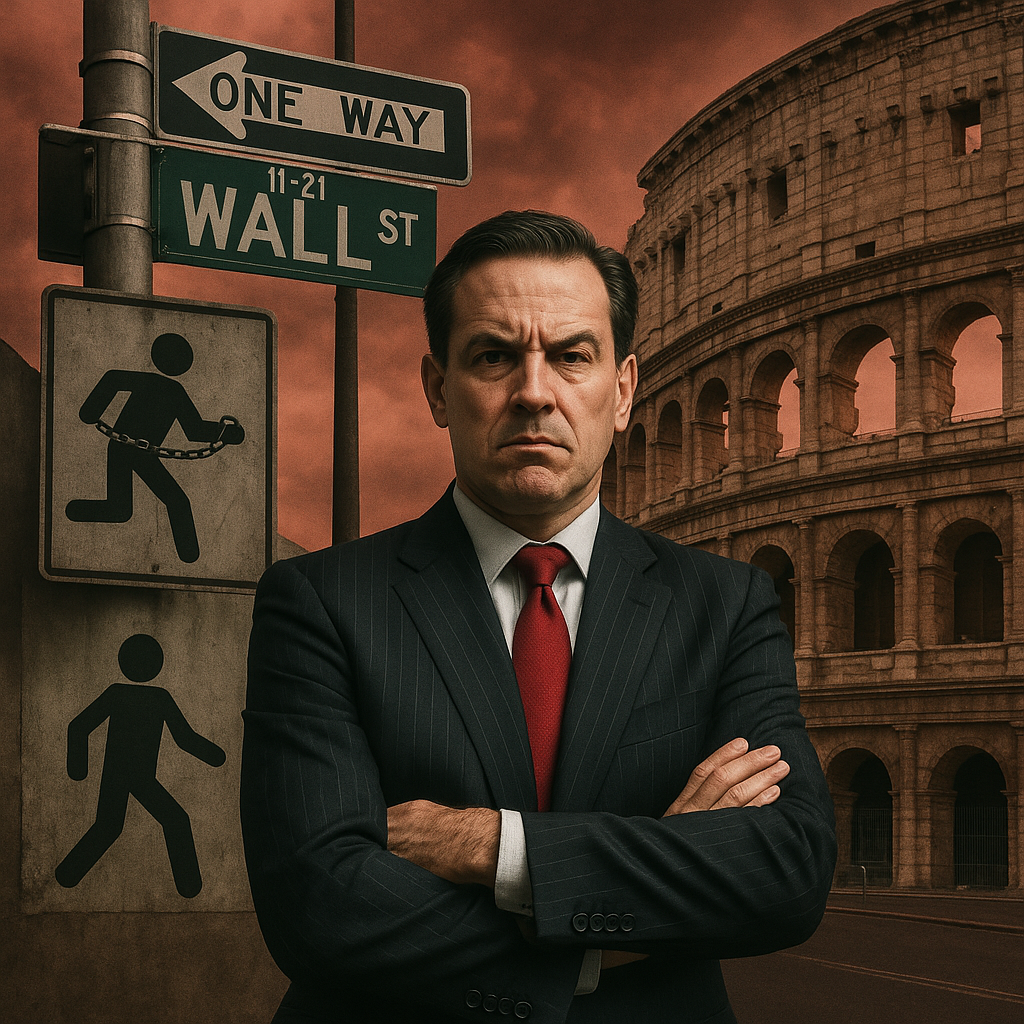

In New York, a CEO who pushes AI innovation is a visionary.

In Rome, under the EU and Italian legal lens, that same CEO might be a defendant.

Let’s be clear: the Artificial Intelligence Act and the GDPR are not optional reading. They are a compliance minefield that now stretches far beyond Europe’s borders.

Picture this: a boutique hotel in Italy proudly announces its “AI concierge,” a friendly chatbot that talks with guests, recommends restaurants, remembers preferences, and learns from conversations.

It sounds harmless. Until regulators knock on the door.

Because that chatbot:

- Processes personal data: names, emails, booking history, emotions, and habits, as well as allergies or special needs, which can reveal health information.

- Learns and profiles users through algorithmic adaptation.

- Operates without clear human oversight.

- Fails to say it’s an AI — violating transparency obligations under both GDPR and the AI Act.

In short: what looked like customer care turns into a compliance nightmare.
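The last gap in that list, the missing AI disclosure, is the easiest one to picture in code. Below is a minimal Python sketch, assuming a hypothetical `DisclosingConcierge` wrapper around whatever function actually generates the chatbot's replies. The class name, the `reply_fn` parameter, and the disclosure wording are all illustrative assumptions, not text taken from the AI Act or the GDPR, and the sketch makes no claim that this wording alone satisfies either one.

```python
from dataclasses import dataclass, field
from typing import Callable, Set

# Hypothetical wording: an assumption for illustration, not language from any regulation.
AI_DISCLOSURE = "You are chatting with an automated AI assistant, not a human."


@dataclass
class DisclosingConcierge:
    """Wraps a reply function so the first reply in each session carries the AI disclosure."""

    reply_fn: Callable[[str], str]
    disclosed_sessions: Set[str] = field(default_factory=set)

    def respond(self, session_id: str, message: str) -> str:
        reply = self.reply_fn(message)
        # Prepend the disclosure only once per session, on the first reply.
        if session_id not in self.disclosed_sessions:
            self.disclosed_sessions.add(session_id)
            return f"{AI_DISCLOSURE}\n{reply}"
        return reply


if __name__ == "__main__":
    # Stand-in reply function; a real deployment would call the actual chatbot here.
    bot = DisclosingConcierge(reply_fn=lambda msg: "Happy to recommend a restaurant.")
    print(bot.respond("guest-42", "Where should I eat tonight?"))
    print(bot.respond("guest-42", "Thanks!"))
```

The point of the design is that the disclosure lives in the wrapper, not in the model's prompt, so it cannot be silently dropped when the underlying chatbot changes.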

The Replika (Luka Inc.) Case: A Warning Shot

Earlier this year, Italy’s Data Protection Authority fined Luka Inc., creator of the AI chatbot Replika, €5 million — and the company isn’t even based in Europe.

Why?

Because Replika interacted with Italian users, collecting sensitive data, while offering no valid legal basis, no age verification, and an unclear privacy policy (available only in English).

That was enough.

Europe’s message was simple: if your AI talks to Europeans, you’re in the game, and you’d better play by the rules. And Italy’s rules are even stricter.

The Luka case has become a global precedent. It proves that “move fast and break things” doesn’t work when what you’re breaking is privacy, dignity, and fundamental rights.

Europe’s Legal Arsenal: GDPR + AI Act + Italian AI Law

Here’s the new rulebook for anyone building or deploying AI systems that might touch European users:

- GDPR – still the global benchmark for personal data protection. Legal basis, transparency, data minimization, and individual rights are non-negotiable.

- AI Act (Regulation (EU) 2024/1689) – the world’s first comprehensive AI regulation. It classifies AI systems by risk level. Even “limited-risk” tools like chatbots must inform users that they are speaking with an AI.

- Italy’s National AI Law (Law 132/2025) – in force since October 2025. It adds local obligations: transparency, human oversight, child protection, and respect for human dignity.

Together, they form a regulatory trident, and ignoring it can sink even the boldest tech ship. Violations can lead to civil suits, class actions, and eventually criminal charges.

What happened to Luka Inc. could happen to anyone — from a Tuscan resort to a Silicon Valley unicorn.

A U.S. CEO may think: “Our servers are in Texas, our company is Delaware-based.”

But if one user from Milan, Berlin, or Paris interacts with your chatbot, you’re already under European jurisdiction.

And while you’re checking that, ask yourself one more question:

“Could the data I used to train or fine-tune my AI include European or Italian citizens?”

Because if your model learned from EU data — even indirectly — you may already be processing personal information protected by GDPR.

That means obligations: lawful basis, data mapping, documentation, impact assessment, and yes — potential sanctions if things go wrong.

Fail any of these, and congratulations: you’ve just opened a transatlantic legal front.

The U.S. celebrates disruption; Europe regulates it.

But that doesn’t mean Europe is anti-innovation.

It means Europe is writing the moral code of the algorithmic age, while others still argue about syntax.

A CEO who processes data for AI without understanding these laws isn’t bold — he’s blind.

And in a world where trust is currency, compliance has become the new venture capital.

The next time your company launches an AI feature — chatbot, recommender, voice assistant — ask yourself:

“Could this reach a European user?”

“Could my training data include European citizens?”

If the answer is yes, start reading like a lawyer, not just coding like a visionary.

Because what looks like innovation on Wall Street may look like a criminal omission on Via Veneto — where La Dolce Vita meets the Data Protection Authority.

Gianni Dell’Aiuto is an Italian attorney with over 35 years of experience in legal risk management, data protection, and digital ethics. Based in Rome and proudly Tuscan, he advises businesses globally on regulations like the GDPR, AI Act, and NIS2. An author and frequent commentator on legal innovation, he helps companies turn compliance into a competitive edge while promoting digital responsibility.

Editor: Wendy S Huffman

SOURCE LISTING:

Written by Gianni Dell'Aiuto. Originally published material, adapted for WBN News.