AI Privacy Laws: Protecting the Newest Endangered Species

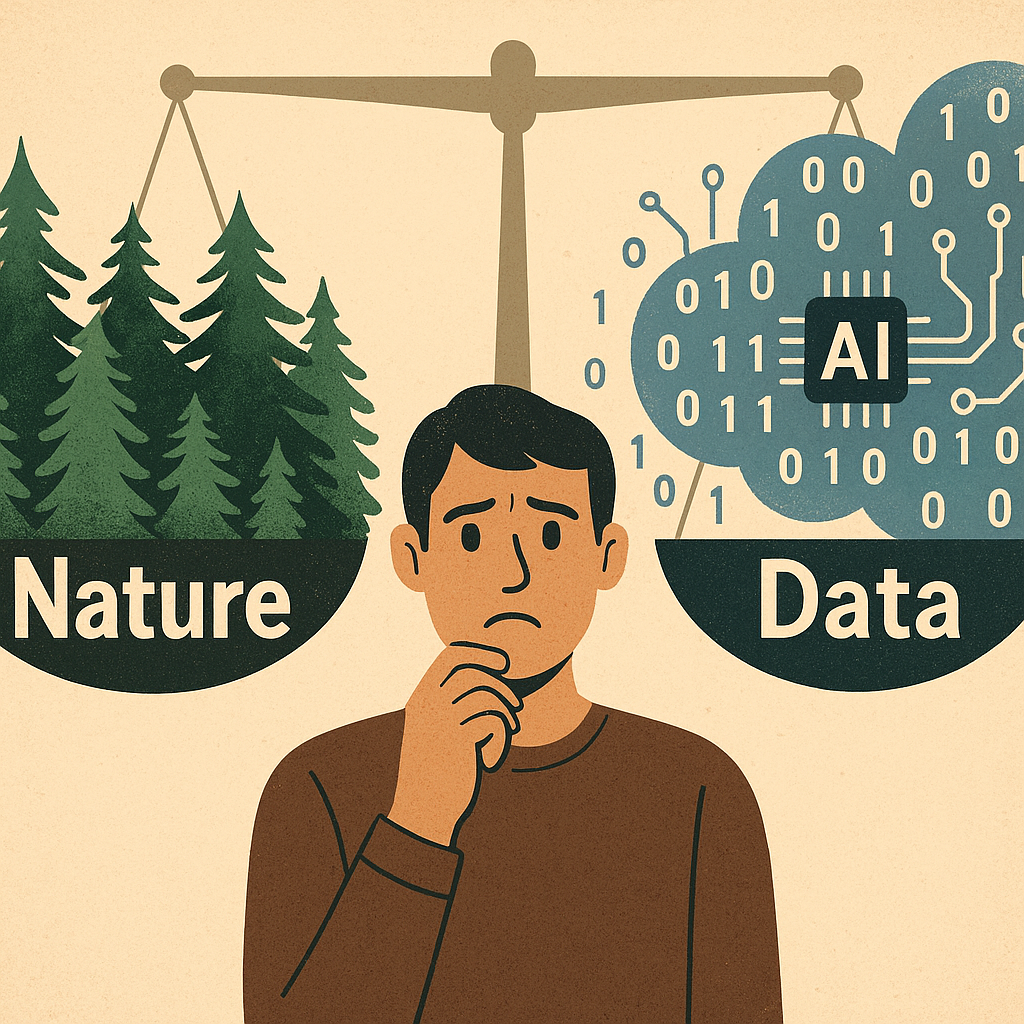

This post compares the EU AI Act’s transparency, accountability, and sustainability pillars to environmental protection, arguing that explainable AI is vital for digital trust and human rights: not red tape, but a survival strategy in the data-driven era.
